Synopsis

Leaf++ is an augmented reality system that uses computer vision to recognize the shapes of leaves and to enrich them with digital data. LEAF++ addresses the themes of the Third Landscape by Gilles Clément. Its objective is to create an interactive infoscape layered onto the world through augmented reality. This stratification of digital information is visually composed so that it fits coherently with the spaces around us. It disseminates information and interactive experiences that foster awareness and knowledge of the natural environment and its richness, and it re-integrates this information into urban environments. It addresses the issues of biodiversity, sustainability, ecology and the environment, and it brings back to the dwellers of urban spaces knowledge about plants and vegetables: their seasonality, places of origin, characteristics, benefits, possible uses, traditions and history.

LEAF++ has been used for research, education and artistic performance.

The system was produced by FakePress and Art is Open Source in 2010, following the initial experiments of the Toys++ and Nkisi++ research projects.

Leaf++ will be officially presented at ISEA 2011, in Istanbul, but is already available for testing, research and education.


LEAF++

Augmented Reality for Leaves

Keywords

augmented reality, computer vision, ecology, biodiversity, realtime mapping, education, art performance, urban anthropology

Abstract

Leaf++ is a ubiquitous, interstitial information tool.

It is designed as a new “eye” that can be used to look at the natural landscape of our cities, and to see and understand the Third Landscape. [1]

Leaf++ is an augmented reality system that employs computer vision techniques to recognize plants from their leaves, and it allows users to associate digital information, interactive experiences and generative aesthetic experiences with them. Its purpose is to create a disseminated, ubiquitous, accessible form of interaction with the natural environment: a suggestive, exciting and, above all, desirable and accessible contact with the knowledge, wisdom and awareness of the inhabitants of the natural ecosystem around us.

Leaf++ shifts the focus of how we see urban landscapes.

It is a tool for a new vision which, through augmented reality, enables the creation of an additional layer of our visual landscape: an infoscape, an information landscape which is directly and coherently added to our vision, in a highly accessible way; a new vision in which leaves and plants come to a new visual life, as the computer vision system actively searches and highlights them, populating our view with information about their origins, living conditions, characteristics and interactions with our urban/natural ecosystems.

Leaf++ also acts as a distributed, dynamic, realtime, emergent geographer of the Third Landscape: each usage triggers a mutation of the map which is shared in realtime by all persons using Leaf++, providing a fluid cartography of the Third Landscape.

Introduction

“The Third Landscape – an undecided fragment of the Planetary Garden – indicates the sum of the spaces in which man gave up to nature in the evolution of the landscape. It regards urban and rural forgotten places, spaces for transit, industrial wastelands, swamps, moors, bogs, but also the sides of roads, rivers and train tracks. The whole of these forgotten places are reserves. De facto reserves are: inaccessible places, mountain tops, uncultivated places, deserts; institutional reserves are: national parks, regional parks, «natural reserves»” [1].

Gilles Clément's “Planetary Garden” is one of the most suggestive answers to the changing definition of urban space. The Planetary Garden is to economic and urban globalization what urban gardens were to the cities of the 19th century: the latter reflected the closed or tightly schemed design of urban architecture and layout, while the former represents the connective, fluid, mutating texture of the globally interconnected city. The Planetary Garden is the garden of the global city.

The Third Landscape is a connective fabric composed of residual spaces that tend toward a liquid state, never preserving their shape and resisting governance. Classical preservation and environmental conservation tools, such as surveillance, protection and the creation of limits and borders, cannot be applied to the Third Landscape without destroying its characteristics; as Clément writes, it is “not property, but space for the future” [2]. This is an idea of space that goes beyond the idea of landscape as a place of identity, used as an asset by local societies and as a strategic tool for memory.

An idea of space that exemplifies the possibilities of the contemporary world: a multiplication of narratives; the holistic perception of ecosystems; the possibilities and richness offered by disseminated, interstitial, emergent, mutating, temporary, polyphonic environments; the end of dualistic approaches.

Just as John Barrell spoke of “the dark side of the landscape” [3] to point out the imposition of a single social class's point of view, with Clément we could speak of a “light side”, for the Third Landscape is not an exclusive model but an inclusive one: “a shared fragment of a collective consciousness”. It is based on a planetary remix (brassage), which is at the origin of the current richness of ecosystems [4]. These dynamically mutating spaces embody the multiple agencies that shape the city from architectural, political, economic, poetic, activist and industrial points of view: new forms of nature that emerge by instantaneously creating interstitial ecosystems that flow with the story of the city. They describe a realtime syncretic map that develops together with the creation of new areas for residences, industry, commerce, business, culture and entertainment, and with the death, abandonment and decay of the previous ones: a geography of the mutation of the city.

Clément talks about the necessity of training our gaze into recognizing and understanding the Third Landscape. This requires a new possibility for vision and knowledge dissemination in urban natural environments, a renewed sense of aesthetics, and a morphed sensibility for the possibilities for interaction and communication offered by our surroundings.

Our current interaction and interrelation with the natural environment in urban spaces is mainly delegated to an “institutional” definition of borders and is rather far from traditional knowledge of the ecosystem and its elements. Inhabitants of contemporary urban spaces progressively lose knowledge about their environment, which turns into knowledge of a topology defined by administrations and by commerce. Globalization and daily routines often lead people to recognize plants and vegetables only as ingredients of products found in supermarkets, or as the trees and bushes that decorate the sides of our roads. People progressively lose touch with the seasonality and origins of vegetables, as they have come to expect any given product at any given time in a supermarket. One study [5], among many others of similar scope, advised farmers in remote parts of the world to produce off-season products for export and presented this practice as a truly effective marketing strategy, based on the documented assumption that consumers want specific products all year round.

Outside the supermarket, plants remain largely unknown to most inhabitants of urban spaces. In cities, plants populate the periphery of our worldview, living a mostly aesthetic life, while knowledge about their origins, characteristics, benefits and roles in the ecosystem remains hidden from the majority of citizens.

LEAF++

Leaf++ is a ubiquitous, interstitial information tool.

It is designed as a new “eye” that can be used to look at the natural landscape of our cities.

It is designed to help us see and understand the Third Landscape.

Leaf++ is an augmented reality system that employs computer vision techniques to recognize plants from their morphological characteristics, and it allows us to associate them with digital information, interactive experiences and generative aesthetic experiences. Their purpose is to create a disseminated, ubiquitous, accessible form of interaction with the natural environment, enabling a suggestive, exciting and, above all, desirable and accessible contact with the knowledge, wisdom and awareness of the inhabitants of the natural ecosystem around us.

Leaf++ shifts the focus of how we see urban landscapes.

It is a tool for a new vision which, through augmented reality, enables the creation of an additional layer on our visual landscape: an infoscape and information landscape which is directly and coherently added to our vision; a new field of vision that is accessible with a natural gesture, by looking at the world through a mobile device which acts as a new lens on reality; a new vision in which leaves and plants come to a new visual life as the computer vision system actively searches for them and highlights them, populating our view with information about their origins, living conditions, characteristics and interactions with our urban/natural ecosystems.

Leaf++ also acts as a distributed, dynamic, realtime, emergent geographer of the Third Landscape: each sighting of a member of the plant kingdom in an urban space triggers a mutation of the map, which is shared in realtime by all persons using Leaf++, providing a fluid cartography of the Third Landscape. These new visions (of the plant forms seen by the computer vision system, and thus by us while we look at the world through Leaf++, as well as of the emergent map of the Third Landscape) are turned into a ubiquitous sensorial experience, transformed into morphing, moving images and sounds that create a state of wonder and further connect us to this new visual landscape.

Methodology

The Leaf++ project has been designed and implemented through the following methodological steps:

- initial briefing, which produced the definition of the concept;

- the choice and experimentation of several technologies which could be used to realize the concept;

- the design and implementation of several prototypes, which were used in an iterative, participatory process;

- the generalization of the best prototypal solutions into an open platform;

- the usage of the resulting platform to create two use cases, for education and artistic performance.

Several definitions of landscape were considered:

- “the total character of a region” (von Humboldt);

- “landscapes will deal with their totality as physical, ecological and geographical entities, integrating all natural and human (“caused”) patterns and processes ... ” (Naveh);

- “landscape as a heterogeneous land area composed of a cluster of interacting ecosystems that is repeated in similar form throughout” (Forman and Godron);

- a particular configuration of topography, vegetation cover, land use and settlement pattern which delimits some coherence of natural and cultural processes and activities (Green);

- “a piece of land which we perceive comprehensively around us, without looking closely at single components, and which looks familiar to us” (Haber).

The concept of cognitive landscape, and of its possible hybridization with technologies and with the results of the most advanced contemporary research in urban anthropology, was a fertile domain for discussion during the initial phases in which we gave shape to the concept. A cognitive landscape can be thought of as the result of each organism's mental elaboration of its perceived surroundings [7].

We decided to cross-pollinate the observations found in Farina's analysis of the theory of cognitive landscapes and of mosaic theory with Clément's idea of nomadic observation of a constantly mutating environment, focusing on the value of being able to recognize and understand the fluid and ever-changing natural ecosystem through a process that is inclusive, collaborative and disseminated.

In this mindset, we described a series of objectives, which later formed the concept for Leaf++:

- to create a tool for vision or, even more desirably, a new or mediated vision;

- to create an accessible and natural interaction metaphor, as close as possible to the practices we are accustomed to, and easily executable by a wide range of people across cultures, age groups and skill levels;

- to create an open platform, distributed as documented Open Source software, so that it will, in and of itself, create an active ecosystem of practitioners wishing to use and modify it to enable more practices and possibilities for vision, awareness, understanding, expression and ubiquitous knowledge sharing;

- to create a usable information and interaction layer that is easily hooked onto the elements of the natural environment and that is accessible through mobile devices;

- to create a process which harmoniously conforms with the processes of our vision; just as we interpret what we see geometrically, symbolically, culturally or through our memories, experiences and relationships, Leaf++ should progressively populate our mediated field of vision with aesthetics, information, knowledge, possibilities for relation, understanding and interaction, just as details progressively emerge while we look at things;

- to create an aesthetic, sensorially stimulating, cognitively suggestive experience; one which is able to trigger wonder and emotion, to inspire action and participation, to activate cultures and open dialogues.

Another pressing objective of our research was to bypass the limits imposed by augmented reality systems driven by GPS, compass and accelerometer, and to create an experience strongly based on (computer) vision. Furthermore, the invasiveness of marker-based AR techniques, which require fiducial markers to be placed in the environment, did not fit the goals of the project.

We regarded the openness of the technologies used and produced in the process not only as strategic but as fundamental to promoting the vision defined by the Leaf++ project. For this reason, the research team chose not to use any of the existing commercial (even if free) platforms currently available for computer-vision-based AR. We chose to develop our own technology and to release it for open use by the international scientific and artistic community (the source code of all software used in Leaf++ is currently available on the project's website under a GPL3 license). The production of an open, working platform is, in fact, one of the most significant results of the project, and it fully supports the idea of open, accessible knowledge about the natural ecosystem that we tried to enact through the making of Leaf++.

Technology

We chose to develop a mobile AR browser with the characteristics defined during the previous stage. The browser was developed for Apple's iPhone, mostly because of its stable development environment and its ease of use (which satisfied our requirements of accessibility and usability), and because several international development groups were working on computer vision issues similar to those involved in the project. This allowed us to establish a mutual collaboration that proved both effective and rewarding.

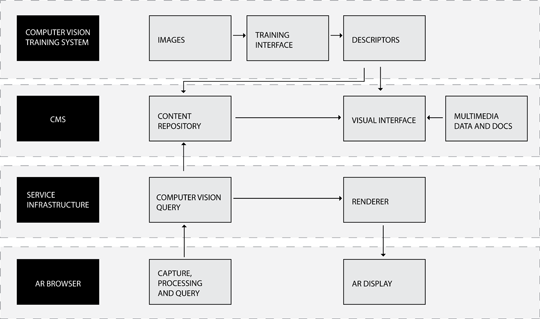

The platform created for Leaf++ is composed of the following elements:

- a trainable computer vision module

- a multimedia CMS (Content Management System)

- a service infrastructure

A computer vision (CV) module is used to provide image recognition features to the system. The CV module uses SURF (Speeded Up Robust Features) algorithms and techniques to identify the various types of leaves. The SURF image detection techniques and descriptors described in [8] are used in the system together with a customized version of the optimizations described in [9].

The optimizations taken from [9] are combined with other custom modifications to the basic SURF algorithm, with the objective of creating a multi-layer version of the descriptors produced by the image recognition components. The layering of the descriptors is designed to produce a set of distance metrics that can easily be applied to the recognition process. Using an optimized b-tree-based search, the initial scan of each image quickly eliminates the leaf image models that are “too far” (this distance is configurable according to the set of plants to be recognized) by using only the first layer of the descriptors: a low-resolution analysis that can be performed very efficiently. Once this first pass is done, the next layers of the descriptors can focus on identifying more localized characteristics of each model, benefiting from the processing already executed.

In this image it is possible to see three of these steps in sequence. The example shows how the successive phases of image recognition (and the respective layers of the descriptors) analyze progressively more granular details of the image, which makes it possible to establish a b-tree-optimized analysis whose branching scheme moves from one level of detail to the next. In the image, the highlighted points/crosses show the features used at a given level of the descriptor. The b-tree-led progressive analysis proceeds from level to level and focuses on progressively smaller image areas, making efficient use of the added granularity.
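The coarse-to-fine elimination described above can be illustrated with a minimal sketch. It assumes, for illustration only, that each leaf model is stored as a list of fixed-length summary vectors, one per descriptor layer (coarsest first), and it replaces the b-tree with a plain per-layer scan, so it shows the pruning logic rather than the indexing structure. The function `coarse_to_fine_match`, the per-layer `thresholds` and the model data are hypothetical.

```python
import math
from typing import Dict, List, Optional, Sequence, Tuple

Vector = Sequence[float]


def euclidean(a: Vector, b: Vector) -> float:
    """Euclidean distance between two descriptor vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def coarse_to_fine_match(
    query_layers: List[Vector],
    models: Dict[str, List[Vector]],
    thresholds: List[float],
) -> Optional[Tuple[str, float]]:
    """Match a query against multi-layer leaf models, coarsest layer first.

    A model is discarded as soon as its distance at some layer exceeds that
    layer's threshold, so the finer (more expensive) layers are only compared
    against the models that survived the previous layers.
    Returns (model name, distance at the finest layer), or None if nothing survives.
    """
    if not thresholds:
        raise ValueError("at least one layer threshold is required")
    candidates = list(models)
    last_distance: Dict[str, float] = {}
    for level, threshold in enumerate(thresholds):
        survivors = []
        for name in candidates:
            d = euclidean(query_layers[level], models[name][level])
            if d <= threshold:
                survivors.append(name)
                last_distance[name] = d
        candidates = survivors
        if not candidates:
            return None
    best = min(candidates, key=lambda name: last_distance[name])
    return best, last_distance[best]


# Hypothetical two-layer models: a 2-value coarse summary, then a 3-value finer one.
models = {
    "oak": [[0.9, 0.1], [0.8, 0.3, 0.5]],
    "maple": [[0.2, 0.9], [0.1, 0.7, 0.4]],
    "laurel": [[0.85, 0.2], [0.3, 0.2, 0.9]],
}
query = [[0.88, 0.15], [0.75, 0.35, 0.5]]
print(coarse_to_fine_match(query, models, thresholds=[0.3, 0.2]))
```

In this example the maple model is dropped at the coarse layer and the laurel model only at the fine layer, so the oak model is returned; only two of the three models ever reach the finer comparison.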

Specifically, the CV component is integrated in a system enacting the following process:

- image acquisition

- generation of feature descriptors

- classification and initial configuration of the CMS

Over the iterations, it is possible to fine-tune both the levels of granularity used to define the levels of the descriptors and the points used within each level. Images can be replaced by re-uploading them to the processor, to correct undesired situations in which lighting conditions, perspective or obstructions prevent an image from being processed optimally.

Several optimizations turn this otherwise general process into one tuned for effective leaf recognition: a focus on border/edge enhancement and recognition, and a focus on the symmetries that are typical of leaf shapes, parametrized according to various radii and offsets of symmetry in order to accommodate various types of leaf shape.
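The symmetry criterion can be sketched as follows. This is an illustrative assumption, not the project's code: the leaf is given as a binary mask (1 for leaf pixels), symmetry is measured only about vertical axes, and the “offset” is the axis position, searched exhaustively; the radius-based parametrization mentioned above is not modelled. `mirror_symmetry` and `best_vertical_axis` are hypothetical names.

```python
from typing import List, Tuple

Mask = List[List[int]]  # one row per image line: 1 = leaf pixel, 0 = background


def mirror_symmetry(mask: Mask, axis2: int) -> float:
    """Share of leaf pixels whose mirror image across a vertical axis is also a leaf pixel.

    `axis2` is twice the axis column, so the axis may also fall between two
    pixel columns: column c is reflected onto column axis2 - c.
    """
    total = matched = 0
    for row in mask:
        for c, value in enumerate(row):
            if not value:
                continue
            total += 1
            mirrored = axis2 - c
            if 0 <= mirrored < len(row) and row[mirrored]:
                matched += 1
    return matched / total if total else 0.0


def best_vertical_axis(mask: Mask) -> Tuple[float, float]:
    """Try every axis offset and return (axis column, symmetry score) of the best one."""
    width = len(mask[0])
    best2 = max(range(2 * width - 1), key=lambda a2: mirror_symmetry(mask, a2))
    return best2 / 2, mirror_symmetry(mask, best2)


# A toy diamond-shaped "leaf", deliberately not centred in its 6-column frame.
leaf = [
    [0, 0, 0, 1, 0, 0],
    [0, 0, 1, 1, 1, 0],
    [0, 1, 1, 1, 1, 1],
    [0, 0, 1, 1, 1, 0],
    [0, 0, 0, 1, 0, 0],
]
print(best_vertical_axis(leaf))  # → (3.0, 1.0)
```

Searching over the axis offset rather than assuming the image centre is what lets the score tolerate leaves that are not centred in the frame.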

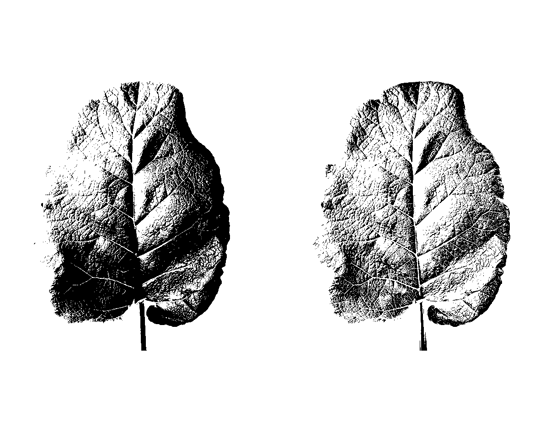

In the following image it is possible to see two examples of the results of this process on a single, detailed (3rd-level) descriptor. On the left, a non-optimized descriptor shows areas of extreme density in feature identification, as well as areas of relative emptiness. On the right, a correctly equalized image allows the selection of homogeneously distributed feature points at various levels of granularity (meaning that some feature points help describe visual features of the leaf at different scales, from borders and veins down to the smaller texture components that are typical of specific types of leaves).
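The text does not say how the equalization is computed. As an assumption, the sketch below uses standard global histogram equalization on an 8-bit grayscale image (a list of rows), which spreads a narrow range of intensities over the full 0-255 range before features are detected. `equalize_histogram` is a hypothetical name, and the project may well use a different or local equalization.

```python
from typing import List

Image = List[List[int]]  # grayscale rows, intensities 0..255


def equalize_histogram(image: Image, levels: int = 256) -> Image:
    """Global histogram equalization: remap each intensity through the normalized CDF."""
    pixels = [v for row in image for v in row]
    n = len(pixels)
    if n == 0:
        return []
    histogram = [0] * levels
    for v in pixels:
        histogram[v] += 1
    # Cumulative distribution function of the intensities.
    cdf, running = [], 0
    for count in histogram:
        running += count
        cdf.append(running)
    cdf_min = next(c for c in cdf if c > 0)  # CDF value of the darkest intensity present
    if n == cdf_min:
        return [row[:] for row in image]  # a flat image cannot be stretched
    lut = [max(0, round((c - cdf_min) * (levels - 1) / (n - cdf_min))) for c in cdf]
    return [[lut[v] for v in row] for row in image]


low_contrast = [[100, 101], [102, 103]]
print(equalize_histogram(low_contrast))  # → [[0, 85], [170, 255]]
```

Stretching contrast this way tends to raise feature responses in flat, low-contrast regions, which is one plausible route to the more even distribution of feature points described above.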

At the end of this part of the process, a set of the multi-layer descriptors described above is produced, one for each type of leaf to be recognized. Each of them is associated with a series of keywords establishing a taxonomy whose nodes are associated with the visual elements of the various types of leaves.

This taxonomy is used in the CMS, which is implemented using a customized version of the WordPress content management platform. The taxonomy produced in the previous phase is reproduced inside WordPress in the form of a “custom taxonomy”.

The standard WordPress CMS is used in Leaf++ through a series of completely personalized interfaces, designed and developed as a complete and integrated set of themes and plugin components that comply with the standards defined by the WordPress development community. This has an important consequence: the Leaf++ infrastructure can be deployed on virtually any WordPress-powered website, which makes it accessible, convenient and easy to use. Any research that could benefit from a tool with augmented reality characteristics of the kind shown by Leaf++ can thus use the software by installing a standard WordPress CMS and configuring the Leaf++ theme and a set of 3 plugins. The whole software stack used in the Leaf++ system (including WordPress and the other software components included in the various modules) is distributed under the GPL3 license, which allows maximum freedom of use for all forms of research, art and non-profit activity.

Using the standard features of the CMS, it is thus possible to associate multimedia content (videos, sounds, texts, documents and interactive experiences) with each part of the taxonomy and, therefore, with the visual elements of the types of leaves that have been added to the system.
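The association between taxonomy terms, leaf parts and content can be modelled in memory as below. This is a hypothetical stand-in for the WordPress custom taxonomy, not its API: terms form a tree, content blocks are attached to terms, and, as an assumption of this sketch, a lookup also returns the content of each term's ancestors, so general information about a plant accompanies the details of a specific leaf part. The term names and the `Taxonomy`/`ContentBlock` classes are illustrative.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class ContentBlock:
    kind: str     # e.g. "text", "image", "sound", "video", "model" (COLLADA)
    payload: str  # text body or media URL


@dataclass
class Taxonomy:
    parent: Dict[str, Optional[str]] = field(default_factory=dict)
    content: Dict[str, List[ContentBlock]] = field(default_factory=dict)

    def add_term(self, term: str, parent: Optional[str] = None) -> None:
        self.parent[term] = parent
        self.content.setdefault(term, [])

    def attach(self, term: str, block: ContentBlock) -> None:
        self.content[term].append(block)

    def lineage(self, term: str) -> List[str]:
        """The term followed by its ancestors, most specific first."""
        chain: List[str] = []
        current: Optional[str] = term
        while current is not None:
            chain.append(current)
            current = self.parent[current]
        return chain

    def content_for(self, terms: List[str]) -> List[ContentBlock]:
        """Content attached to the given terms and their ancestors, without repeats."""
        seen = set()
        result: List[ContentBlock] = []
        for term in terms:
            for t in self.lineage(term):
                if t not in seen:
                    seen.add(t)
                    result.extend(self.content[t])
        return result


tax = Taxonomy()
tax.add_term("quercus")
tax.add_term("quercus/margin", parent="quercus")
tax.add_term("quercus/veins", parent="quercus")
tax.attach("quercus", ContentBlock("text", "Oak (genus Quercus)"))
tax.attach("quercus/margin", ContentBlock("text", "Lobed leaf margin"))
tax.attach("quercus/veins", ContentBlock("image", "veins.png"))
for block in tax.content_for(["quercus/margin", "quercus/veins"]):
    print(block.kind, block.payload)
```

Recognizing two parts of the same leaf returns both specific blocks but the shared plant-level text only once.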

Several “custom post” type definitions are included in the Leaf++ extension of the WordPress CMS. Custom post types define content assets that share a specific, fixed format, and they also make it possible to create personalized forms through which users can create these assets and fill them with content.

Leaf++ uses a series of these custom post definitions to let users create augmented reality content made of simple text, images, sounds, videos (in a format compatible with most smartphones) and 3D objects in the COLLADA format. Dedicated input forms allow users to add these types of content blocks to the various parts of the recognized leaves by associating them with keywords in the taxonomy and, thus, with the parts of the leaves to which each term is associated.

The service infrastructure brings all parts of the system together for the user experience. A series of software components that can readily be integrated into iPhone applications connect to the device's camera and run the realtime feature recognition process. When a leaf is recognized, its identification is translated into a series of terms in the custom taxonomy, and the relevant content is fetched over the network by querying the modified WordPress CMS. The multimedia assets are then progressively shown in the smartphone's viewfinder, consistently with the realtime onscreen position of the leaf.
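How content can be kept consistent with the leaf's onscreen position, and revealed progressively, is sketched below under stated assumptions: the recognizer is assumed to report the leaf's bounding box in screen pixels on every frame, information cards have a fixed size, one more card is revealed every `frames_per_card` frames of continuous recognition, and cards are stacked beside the leaf and clamped to the screen. All names and parameter values are hypothetical.

```python
from typing import List, NamedTuple


class Box(NamedTuple):
    x: float  # left edge, screen pixels
    y: float  # top edge, screen pixels
    w: float
    h: float


def clamp(value: float, low: float, high: float) -> float:
    return max(low, min(value, high))


def layout_cards(leaf: Box, n_cards: int, frames_tracked: int,
                 screen_w: float, screen_h: float,
                 card_w: float = 120, card_h: float = 40, gap: float = 8,
                 frames_per_card: int = 10) -> List[Box]:
    """Boxes for the cards that should be visible in this frame, stacked next to the leaf."""
    visible = min(n_cards, 1 + frames_tracked // frames_per_card)
    x = leaf.x + leaf.w + gap  # prefer the right-hand side of the leaf
    if x + card_w > screen_w:
        x = leaf.x - gap - card_w  # not enough room: use the left-hand side
    x = clamp(x, 0, screen_w - card_w)
    boxes = []
    for i in range(visible):
        y = clamp(leaf.y + i * (card_h + gap), 0, screen_h - card_h)
        boxes.append(Box(x, y, card_w, card_h))
    return boxes


# Leaf tracked for 15 frames on a 480x320 viewfinder: two of three cards are shown.
print(layout_cards(Box(100, 50, 80, 80), n_cards=3, frames_tracked=15,
                   screen_w=480, screen_h=320))
```

Recomputing the layout from the current bounding box on every frame is what keeps the cards attached to the leaf as the phone moves; tying the number of visible cards to tracking time gives the progressive reveal.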

Information is shown on the mobile devices using a custom renderer built with HTML5 and CSS3 technologies. This allows content to work on all major smartphones currently on the international market. It also allows a single, adaptable version of the interfaces to be produced and then deployed to the various types and brands of smartphones, so that a wider audience can use the application at a much lower development cost.
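A renderer of this kind can emit ordinary HTML with CSS positioning. The sketch below is an assumption rather than the Leaf++ renderer: it turns card positions (in CSS pixels) into absolutely positioned elements moved with a CSS3 `transform: translate(...)`, escaping the text so that CMS content cannot inject markup. The `leaf-card` class name and the function names are hypothetical.

```python
import html
from typing import List, Tuple

Card = Tuple[str, float, float, float, float]  # (text, x, y, width, height) in CSS pixels


def render_card(text: str, x: float, y: float, w: float, h: float) -> str:
    """One card, positioned with a CSS3 transform; the text is HTML-escaped."""
    style = (f"position:absolute;left:0;top:0;width:{w:.0f}px;height:{h:.0f}px;"
             f"transform:translate({x:.0f}px,{y:.0f}px)")
    return f'<div class="leaf-card" style="{style}">{html.escape(text)}</div>'


def render_overlay(cards: List[Card]) -> str:
    """A full-screen, click-through layer holding all cards, meant to sit over the camera view."""
    inner = "".join(render_card(*card) for card in cards)
    return ('<div style="position:fixed;top:0;left:0;right:0;bottom:0;'
            f'pointer-events:none">{inner}</div>')


print(render_card("Oak <Quercus>", 188, 50, 120, 40))
```

Because the output is plain HTML and CSS, the same markup could in principle be displayed by the web view of any smartphone platform, which is the portability argument made above.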

The smartphone interfaces can integrate custom logic that leverages the information obtained from the service layer to implement specific functions.

The first research phase for the Leaf++ project ended with two simple applications: the first dedicated to education, the second to performance arts and generative electronic music.

The education application is shown in the following images.

It is a very basic use case, implemented to thoroughly test all the features of the platform and to serve as a complete, documented example application for interested researchers who wish to experiment with this type of augmented reality system. The application recognizes the supported plants (there are 7 in the example application), and as soon as one of them enters the viewfinder of a smartphone, it uses augmented reality to progressively layer the information stored for that plant in a database, including its scientific name, place of origin, seasonality, characteristics of interest for our health and wellness, uses and so on.

At the same time, whenever a plant is detected in an urban space, the user can mark it as an element of the Third Landscape, thus populating a time-based map that can be compiled collaboratively in this way to study the presence and distribution of the Third Landscape across cities and urban agglomerations.
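The collaborative, time-based map can be reduced to a simple aggregation. The sketch below assumes that each marking is stored as a species name, latitude/longitude and timestamp (a hypothetical `Sighting` record) and counts markings per grid cell of `cell_deg` degrees for consecutive time windows. The resulting sequence of counts is one possible way to study how the Third Landscape appears and disappears over time; the sample data is invented for illustration.

```python
import math
from collections import Counter
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Iterable, List, Tuple

Cell = Tuple[int, int]


@dataclass(frozen=True)
class Sighting:
    species: str
    lat: float
    lon: float
    when: datetime


def cell_of(s: Sighting, cell_deg: float) -> Cell:
    """Index of the grid cell (cell_deg x cell_deg degrees) containing the sighting."""
    return math.floor(s.lat / cell_deg), math.floor(s.lon / cell_deg)


def map_over_time(sightings: Iterable[Sighting], start: datetime,
                  window: timedelta, steps: int,
                  cell_deg: float = 0.01) -> List[Counter]:
    """One Counter of sightings per cell for each window [start + k*window, start + (k+1)*window)."""
    frames: List[Counter] = [Counter() for _ in range(steps)]
    for s in sightings:
        if s.when < start:
            continue
        k = (s.when - start) // window  # timedelta // timedelta gives an int
        if k < steps:
            frames[k][cell_of(s, cell_deg)] += 1
    return frames


t0 = datetime(2011, 5, 1)
marks = [
    Sighting("Taraxacum officinale", 45.0705, 7.6855, t0 + timedelta(hours=2)),
    Sighting("Parietaria judaica", 45.0712, 7.6861, t0 + timedelta(hours=5)),
    Sighting("Taraxacum officinale", 45.0805, 7.6855, t0 + timedelta(days=1, hours=3)),
]
frames = map_over_time(marks, start=t0, window=timedelta(days=1), steps=2)
for day, counts in enumerate(frames):
    print(day, dict(counts))
```

Each new marking only increments one counter, so the shared map can be updated incrementally as markings arrive from many devices.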

The application dedicated to performance arts enacts a “concert for augmented leaves”. In this version, a set of leaves gathered from the surroundings of the concert venue is used to train the Leaf++ system. Then an application on the smartphone starts, allowing the performer to use these leaves to generate music according to their visual features: border shapes, size, veins, textures. Each of these items, identified through the computer vision system of Leaf++, is used as a parameter of generative music software, producing and modifying sounds, filters, notes and sequences that are directly connected with the identified visual features and their position on screen. The visual features thus generate the music, and they also produce the visuals for the concert, in complete synesthesia. After the first part of the concert, the audience is invited for a walk in the surroundings of the venue, to create a walkable, disseminated augmented reality concert that also leverages the last feature of the application: a function that synchronizes and harmonizes the sounds of several Leaf++ systems when they are close together, using Bluetooth technology.
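The mapping from visual features to sound is not specified beyond the list of features used. One hypothetical mapping is sketched below: a feature's horizontal position selects a pitch from a two-octave major pentatonic scale, its vertical position sets the MIDI velocity, and its normalized size sets the note duration. The `Feature` fields, the scale choice and the ranges are assumptions; the Bluetooth synchronization between devices is not modelled.

```python
from dataclasses import dataclass

PENTATONIC = [0, 2, 4, 7, 9]  # major pentatonic degrees, in semitones above the root


@dataclass
class Feature:
    x: float     # horizontal screen position, normalized 0 (left) .. 1 (right)
    y: float     # vertical screen position, normalized 0 (top) .. 1 (bottom)
    size: float  # feature scale, normalized 0 .. 1


@dataclass
class Note:
    pitch: int       # MIDI note number (60 = middle C)
    velocity: int    # MIDI velocity, 1 .. 127
    duration: float  # seconds


def feature_to_note(f: Feature, root: int = 60, octaves: int = 2) -> Note:
    """Map one detected visual feature to one note (hypothetical mapping)."""
    steps = len(PENTATONIC) * octaves
    index = max(0, min(int(f.x * steps), steps - 1))  # left-to-right walks up the scale
    octave, degree = divmod(index, len(PENTATONIC))
    pitch = root + 12 * octave + PENTATONIC[degree]
    velocity = max(1, min(127, round((1.0 - f.y) * 126) + 1))  # higher on screen = louder
    duration = 0.125 + 0.875 * max(0.0, min(f.size, 1.0))  # bigger features = longer notes
    return Note(pitch, velocity, duration)


for feat in [Feature(0.0, 1.0, 0.0), Feature(0.35, 0.5, 0.5), Feature(0.99, 0.0, 1.0)]:
    print(feature_to_note(feat))
```

Quantizing pitches to a single scale is one simple way to keep notes produced by different leaves, or by several nearby devices, consonant with each other.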

References

[1] Clément, Gilles. Manifesto del terzo paesaggio. Macerata: Quodlibet, 2005.

[2] Clément, Gilles. Le jardin planétaire. Réconcilier l'homme et la nature. Paris: Albin Michel, 1999.

[3] Barrell, John. The Dark Side of the Landscape: The Rural Poor in English Painting, 1730-1840. New York: Cambridge University Press, 1980.

[4] di Campli, Antonio. Review of “Manifesto del terzo paesaggio”. architettura.it, 2005.

[5] Singh, D. Production and Marketing of Off-Season Vegetables. 1st ed. New Delhi, India: Mittal Publications, 1990.

[6] Farina, Almo. Principles and Methods in Landscape Ecology. Dordrecht: Springer, 2006.

[7] Farina, Almo. Ecology, Cognition and Landscape: Linking Natural and Social Systems. Dordrecht; New York: Springer, 2010.

[8] Bay, Herbert, Andreas Ess, Tinne Tuytelaars, and Luc Van Gool. “SURF: Speeded Up Robust Features.” Computer Vision and Image Understanding (CVIU) 110, no. 3 (2008): 346-359.

[9] El Choubassi, Maha, and Yi Wu. “Augmented Reality on Mobile Internet Devices.” Dr. Dobb's. Vision and Image Processing Research Group / FTR, Intel Labs. http://drdobbs.com/article/print?articleId=227500133&siteSectionName=